July 17, 2019

Here’s a summary of our discussion about how to deploy trained models for use in an AI application.

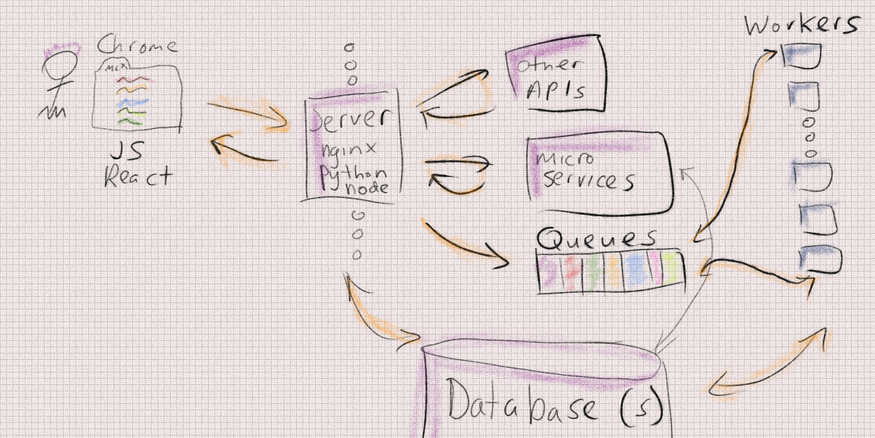

The approach follows a common pattern: a web interface of some sort accepts requests, the requests are queued up, and a server running the AI model handles them asynchronously.
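Below is a minimal, single-process sketch of that pattern. It assumes Python’s standard-library `queue` and `threading` modules stand in for a real message queue and web framework. The names `predict`, `submit`, `worker`, and `get_result` are hypothetical, and `predict` is a placeholder rather than an actual trained model.

```python
import queue
import threading
import uuid


# Hypothetical stand-in for a trained model. A real server would load the
# model once at startup and reuse it for every request.
def predict(features):
    return sum(features) / len(features)


request_queue = queue.Queue()
results = {}
results_lock = threading.Lock()


def submit(features):
    """Called by the web layer: enqueue the request and return a job id at once."""
    job_id = str(uuid.uuid4())
    request_queue.put((job_id, features))
    return job_id


def worker():
    """Model-server loop: pull queued requests and store their predictions."""
    while True:
        job_id, features = request_queue.get()
        if job_id is None:  # sentinel value asks the worker to stop
            request_queue.task_done()
            break
        prediction = predict(features)
        with results_lock:
            results[job_id] = prediction
        request_queue.task_done()


def get_result(job_id):
    """Polled by the web layer; returns None until the prediction is ready."""
    with results_lock:
        return results.get(job_id)


if __name__ == "__main__":
    t = threading.Thread(target=worker, daemon=True)
    t.start()
    job = submit([1.0, 2.0, 3.0])
    request_queue.join()  # block only for this demo; a web app would poll instead
    print(get_result(job))  # 2.0
    request_queue.put((None, None))
    t.join()
```

The key design choice is that `submit` returns immediately with a job id, so a slow model never blocks the web tier. In a real deployment the in-memory queue would usually be replaced by an external broker and the worker would run as a separate process or machine.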

Agenda

- Welcome & Introduction

- Project Updates

- Deploying AI Models

- Plan for next week

Deployment Use Case

Let’s start by thinking about this use case from the Bug Analysis Project:

After we train some sort of model, how do we make it available for the masses?

Think about something like this:

How would we build this?

What about updating the model after you deploy it?
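One common answer, used by TensorFlow Serving, is to export each model version into its own numbered subdirectory under a base path and have the server switch to the highest version when a new one appears. The sketch below mimics that idea in plain Python under stated assumptions: `latest_version`, `ModelHolder`, and the `load_fn` loader are hypothetical names, and the demo “model” is a lambda rather than a real trained model.

```python
import os
import tempfile
import threading


def latest_version(base_path):
    """Return the highest numeric subdirectory name under base_path, or None."""
    versions = [
        int(name)
        for name in os.listdir(base_path)
        if name.isdecimal() and os.path.isdir(os.path.join(base_path, name))
    ]
    return max(versions) if versions else None


class ModelHolder:
    """Holds the currently served model and swaps in newer versions."""

    def __init__(self, base_path, load_fn):
        self.base_path = base_path
        self.load_fn = load_fn  # hypothetical loader: version path -> callable model
        self.version = None
        self.model = None
        self._lock = threading.Lock()

    def refresh(self):
        """Load the newest version if it is newer than the one being served."""
        newest = latest_version(self.base_path)
        if newest is None or (self.version is not None and newest <= self.version):
            return False
        # Load outside the lock so in-flight requests keep using the old model.
        model = self.load_fn(os.path.join(self.base_path, str(newest)))
        with self._lock:
            self.model, self.version = model, newest
        return True

    def predict(self, x):
        with self._lock:
            model = self.model
        return model(x)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as base:
        os.makedirs(os.path.join(base, "1"))
        # Hypothetical loader: the "model" just reports which version answered.
        holder = ModelHolder(base, lambda path: (lambda x: f"v{os.path.basename(path)}: {x}"))
        holder.refresh()
        print(holder.predict("hello"))  # v1: hello
        os.makedirs(os.path.join(base, "2"))  # a newly exported version appears
        holder.refresh()
        print(holder.predict("hello"))  # v2: hello
```

Calling `refresh` periodically (for example from a background thread) lets the server pick up a retrained model without downtime, while requests already in flight finish on the previous version.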

References

Train and serve a TensorFlow model with TensorFlow Serving

There are two very different ways to deploy ML models; here are both: